Datadog logs¶

By enabling this toolset, HolmesGPT will fetch pod logs from Datadog.

Enable this toolset in place of the default kubernetes/logs toolset if all your Kubernetes pod logs are consolidated in Datadog. This makes it easier for HolmesGPT to fetch incident logs, including logs from specific past time ranges.

HolmesGPT provides several out-of-the-box alternatives for log access. You can select from these options:

kubernetes/logs: Access logs directly through Kubernetes. This is the default toolset.

coralogix/logs: Access logs through Coralogix.

datadog/logs: Access logs through Datadog.

grafana/loki: Access Loki logs by proxying through a Grafana instance.

opensearch/logs: Access logs through OpenSearch.

Configuration¶

```yaml
holmes:
  toolsets:
    datadog/logs:
      enabled: true
      config:
        dd_api_key: <your-datadog-api-key> # Required. Your Datadog API key
        dd_app_key: <your-datadog-app-key> # Required. Your Datadog Application key
        site_api_url: https://api.datadoghq.com # Required. Your Datadog site URL (e.g. https://api.us3.datadoghq.com for US3)
        indexes: ["*"] # Optional. List of Datadog indexes to search. Default: ["*"]
        storage_tiers: ["indexes"] # Optional. Ordered list of storage tiers to query (fallback mechanism). Options: "indexes", "online-archives", "flex". Default: ["indexes"]
        labels: # Optional. Map Datadog labels to Kubernetes resources
          pod: "pod_name"
          namespace: "kube_namespace"
        page_size: 300 # Optional. Number of logs per API page. Default: 300
        default_limit: 1000 # Optional. Default maximum logs to fetch when limit not specified by the LLM. Default: 1000
        request_timeout: 60 # Optional. API request timeout in seconds. Default: 60
    kubernetes/logs:
      enabled: false # HolmesGPT's default logging mechanism MUST be disabled
```

Update your Helm values (generated_values.yaml) with the above configuration and run a Helm upgrade:

```bash
helm upgrade robusta robusta/robusta --values=generated_values.yaml --set clusterName=<YOUR_CLUSTER_NAME>
```

If you use the Holmes CLI instead, add the following to ~/.holmes/config.yaml, creating the file if it doesn't exist:

```yaml
toolsets:
  datadog/logs:
    enabled: true
    config:
      dd_api_key: <your-datadog-api-key> # Required. Your Datadog API key
      dd_app_key: <your-datadog-app-key> # Required. Your Datadog Application key
      site_api_url: https://api.datadoghq.com # Required. Your Datadog site URL (e.g. https://api.us3.datadoghq.com for US3)
      indexes: ["*"] # Optional. List of Datadog indexes to search. Default: ["*"]
      storage_tiers: ["indexes"] # Optional. Ordered list of storage tiers to query (fallback mechanism). Options: "indexes", "online-archives", "flex". Default: ["indexes"]
      labels: # Optional. Map Datadog labels to Kubernetes resources
        pod: "pod_name"
        namespace: "kube_namespace"
      page_size: 300 # Optional. Number of logs per API page. Default: 300
      default_limit: 1000 # Optional. Default maximum logs to fetch when limit not specified by the LLM. Default: 1000
      request_timeout: 60 # Optional. API request timeout in seconds. Default: 60
  kubernetes/logs:
    enabled: false # HolmesGPT's default logging mechanism MUST be disabled
```

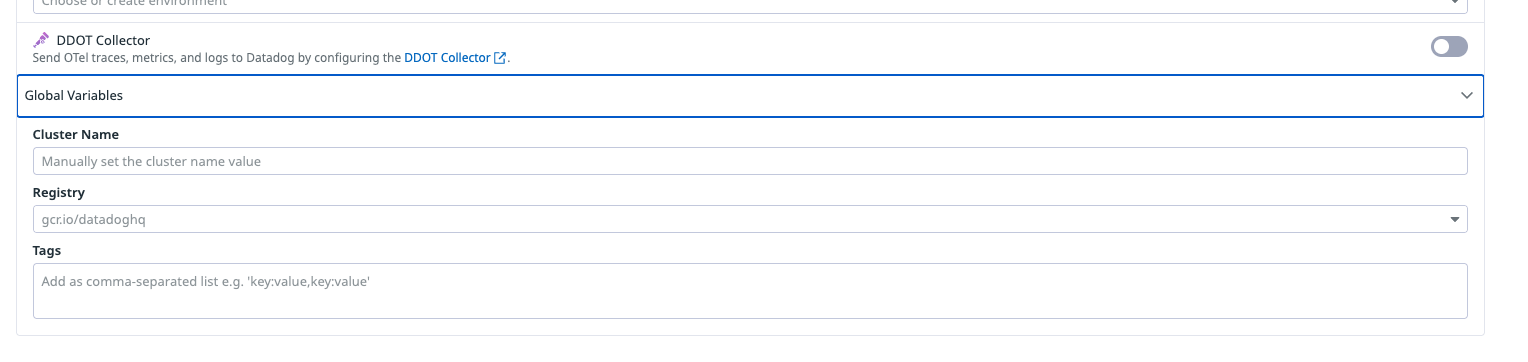

Datadog Kubernetes cluster label¶

In multi-cluster environments, HolmesGPT routes alert investigations to the Kubernetes cluster on which the alert is firing.

Add the cluster label to your Datadog monitoring agent:

Getting API and Application Keys¶

To use this toolset, you need both a Datadog API key and Application key:

API Key: Go to Organization Settings > API Keys in your Datadog console.

The API key must have the logs_read_data permission scope. When creating a new key, ensure this permission is enabled.

Application Key: Go to Organization Settings > Application Keys in your Datadog console.

For more information, see the Datadog API documentation.
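Datadog reads the API key from the DD-API-KEY request header and the Application key from DD-APPLICATION-KEY. Before enabling the toolset, you can check that the API key is accepted by calling Datadog's key-validation endpoint (GET /api/v1/validate). The Python sketch below only builds that request with the standard library and does not send it; build_validate_request is a hypothetical helper, not part of HolmesGPT. This check covers the API key only: it does not confirm the logs_read_data permission or the Application key.

```python
import urllib.request


def build_validate_request(site_api_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request to Datadog's API key validation endpoint.

    Hypothetical helper for illustration only.
    """
    url = site_api_url.rstrip("/") + "/api/v1/validate"
    # Datadog expects the API key in the DD-API-KEY header.
    return urllib.request.Request(url, headers={"DD-API-KEY": api_key}, method="GET")


req = build_validate_request("https://api.datadoghq.com/", "<your-datadog-api-key>")
print(req.get_method(), req.full_url)

# To actually send it (requires network access and a real key):
#   with urllib.request.urlopen(req, timeout=60) as resp:
#       print(resp.status, resp.read().decode())
```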

Configuring Site URL¶

The site_api_url must match your Datadog site. Common values include:

https://api.datadoghq.com - US1

https://api.us3.datadoghq.com - US3

https://api.us5.datadoghq.com - US5

https://api.datadoghq.eu - EU

https://api.ap1.datadoghq.com - AP1

For a complete list of site URLs, see the Datadog site documentation.
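The site_api_url is the base URL for Datadog API requests. As an illustration, the sketch below builds (without sending) a request to Datadog's Logs Search API v2 endpoint (POST /api/v2/logs/events/search) on the configured site. It assumes the request-body field names of Datadog's public API (filter.query, filter.indexes, filter.storage_tier, page.limit, sort); build_search_request is hypothetical, and the request HolmesGPT actually sends may differ.

```python
import json
import urllib.request


def build_search_request(
    site_api_url: str,
    api_key: str,
    app_key: str,
    query: str,
    indexes: list,
    storage_tier: str = "indexes",
    page_size: int = 300,
) -> urllib.request.Request:
    """Build (but do not send) a Datadog Logs Search API v2 request. Hypothetical helper."""
    body = {
        "filter": {
            "query": query,
            "indexes": indexes,
            "from": "now-1h",  # illustrative time window; Datadog accepts date math like this
            "to": "now",
            "storage_tier": storage_tier,
        },
        "page": {"limit": page_size},
        "sort": "-timestamp",  # newest logs first
    }
    return urllib.request.Request(
        site_api_url.rstrip("/") + "/api/v2/logs/events/search",
        data=json.dumps(body).encode(),
        headers={
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_search_request(
    "https://api.datadoghq.eu",  # EU site
    "<your-datadog-api-key>",
    "<your-datadog-app-key>",
    query="kube_namespace:default",
    indexes=["*"],
)
print(req.get_method(), req.full_url)
```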

Configuring Storage Tiers¶

Datadog offers different storage tiers for logs with varying retention and costs:

| Storage Tier | Description | Use Case |
|---|---|---|
| indexes | Hot storage for recent logs (default) | Real-time analysis and alerting |
| online-archives | Warm storage for older logs | Historical log analysis |
| flex | Cost-effective storage | Long-term retention |

The toolset uses storage tiers as a fallback mechanism: each subsequent tier is queried only if the previous tier returned no results.

For example, if the toolset is configured with storage_tiers: ["indexes", "online-archives"], then:

Holmes first runs a query using the indexes storage tier.

If there are no results at all, Holmes then queries online-archives.
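The fallback can be sketched as follows. run_query stands in for a single-tier search (for example, a request with filter.storage_tier set to the tier name) and is hypothetical, as is the in-memory store, which exists only to show which tiers get queried.

```python
from typing import Callable, Optional, Sequence


def fetch_with_tier_fallback(
    storage_tiers: Sequence[str],
    run_query: Callable[[str], list],
) -> tuple[Optional[str], list]:
    """Query storage tiers in order and stop at the first tier that returns any logs.

    Returns (tier_that_matched, logs), or (None, []) if every tier was empty.
    """
    for tier in storage_tiers:
        logs = run_query(tier)
        if logs:  # later tiers are queried only when this one returned nothing
            return tier, logs
    return None, []


# Simulated data: the hot index has nothing for this pod, the online archive does.
fake_store = {
    "indexes": [],
    "online-archives": [{"message": "container OOMKilled"}],
    "flex": [{"message": "never reached"}],
}
queried: list[str] = []


def fake_query(tier: str) -> list:
    queried.append(tier)  # record the order in which tiers are searched
    return fake_store[tier]


tier, logs = fetch_with_tier_fallback(["indexes", "online-archives", "flex"], fake_query)
print(tier, queried)  # online-archives ['indexes', 'online-archives']
```

Because the loop stops at the first non-empty tier, a tier that returns even a single log prevents later tiers from being searched, so list the tier you want checked first at the front of storage_tiers.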

Handling Rate Limits¶

If you encounter rate limiting issues with Datadog (visible as warning messages in Holmes logs), you can adjust the following parameters:

page_size: Reduce this value to fetch fewer logs per API request. This helps avoid hitting rate limits on individual requests.

default_limit: Lower this value to reduce the total number of logs fetched when no explicit limit is specified.
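To see how the two settings interact, assume the toolset requests pages of at most page_size logs until it has collected the limit (an assumption about the pagination logic, not something this page specifies). The number of requests per fetch is then at most ceil(limit / page_size); api_calls_needed is a hypothetical name.

```python
import math


def api_calls_needed(limit: int, page_size: int) -> int:
    """Upper bound on paginated requests needed to collect `limit` logs."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    if limit <= 0:
        return 0
    return math.ceil(limit / page_size)


print(api_calls_needed(1000, 300))  # defaults: 4 requests (300 + 300 + 300 + 100)
print(api_calls_needed(1000, 100))  # smaller pages only: 10 requests
print(api_calls_needed(500, 100))   # smaller pages and lower limit: 5 requests
```

Lowering page_size on its own makes each response smaller but increases the number of requests per fetch, which is why it pairs well with a lower default_limit.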

Example configuration for rate-limited environments:

```yaml
toolsets:
  datadog/logs:
    enabled: true
    config:
      page_size: 100 # Reduced from default 300
      default_limit: 500 # Reduced from default 1000
```

When rate limiting occurs, Holmes will automatically retry with exponential backoff. You'll see warnings like:

```
DataDog logs toolset is rate limited/throttled. Waiting X.Xs until reset time
```
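The wait behind that warning can be approximated as below. This is a hypothetical sketch, not HolmesGPT's code: it assumes the wait comes from Datadog's X-RateLimit-Reset response header (seconds until the rate-limit window resets) when present, and otherwise falls back to capped exponential backoff. The base and cap values are illustrative.

```python
from typing import Mapping


def retry_delay(
    attempt: int,
    headers: Mapping[str, str],
    base: float = 1.0,
    cap: float = 60.0,
) -> float:
    """Seconds to wait before retry number `attempt` (0-based) after an HTTP 429.

    `headers` is a plain, case-sensitive dict here; real HTTP header objects such as
    http.client.HTTPMessage look names up case-insensitively.
    """
    reset = headers.get("X-RateLimit-Reset")
    if reset is not None:
        try:
            # Wait until the window resets; never return a negative delay.
            return max(float(reset), 0.0)
        except ValueError:
            pass  # malformed header: fall back to exponential backoff
    # Exponential backoff: base, 2*base, 4*base, ... capped at `cap`.
    return min(base * (2 ** attempt), cap)


print(retry_delay(0, {"X-RateLimit-Reset": "7"}))  # 7.0
print([retry_delay(n, {}) for n in range(5)])       # [1.0, 2.0, 4.0, 8.0, 16.0]
```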

Configuring Labels¶

You can customize the labels used by the toolset to identify Kubernetes resources. This is optional and only needed if your Datadog logs use different field names than the defaults.

```yaml
toolsets:
  datadog/logs:
    enabled: true
    config:
      labels:
        pod: "pod_name" # The field name for Kubernetes pod name in your Datadog logs
        namespace: "kube_namespace" # The field name for Kubernetes namespace in your Datadog logs
```

To find the correct field names in your Datadog logs:

Go to Logs > Search in your Datadog console

View a sample log entry

Identify the field names used for pod name and namespace

Update the labels configuration accordingly
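The labels decide which Datadog fields a pod-log query filters on. The sketch below shows the idea using Datadog's field:value search syntax, where space-separated terms are combined with AND. build_pod_query is hypothetical and the exact query HolmesGPT sends may differ; the custom field names in the second call (k8s_pod, k8s_namespace) are made up for illustration.

```python
from typing import Mapping

# Defaults documented for the toolset's `labels` setting.
DEFAULT_LABELS = {"pod": "pod_name", "namespace": "kube_namespace"}


def build_pod_query(namespace: str, pod: str, labels: Mapping[str, str] = DEFAULT_LABELS) -> str:
    """Build a Datadog log search query that selects a single pod."""
    return f'{labels["namespace"]}:{namespace} {labels["pod"]}:{pod}'


print(build_pod_query("payments", "api-7d9f8c-x2k4p"))
# kube_namespace:payments pod_name:api-7d9f8c-x2k4p

# With hypothetical custom field names configured under `labels`:
print(build_pod_query("payments", "api-7d9f8c-x2k4p", {"pod": "k8s_pod", "namespace": "k8s_namespace"}))
# k8s_namespace:payments k8s_pod:api-7d9f8c-x2k4p
```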

Disabling the Default Logging Toolset¶

The default HolmesGPT logging toolset must be disabled if you use a different data source for logs.

If kubernetes/logs is not disabled, HolmesGPT may keep fetching logs with kubectl and never call your data source.

To disable the default logging toolset, add the following to your holmes configuration:

```yaml
holmes:
  toolsets:
    kubernetes/logs:
      enabled: false
```

Update your Helm values (generated_values.yaml) with the above configuration and run a Helm upgrade:

```bash
helm upgrade robusta robusta/robusta --values=generated_values.yaml --set clusterName=<YOUR_CLUSTER_NAME>
```

If you use the Holmes CLI instead, add the following to ~/.holmes/config.yaml, creating the file if it doesn't exist:

```yaml
toolsets:
  kubernetes/logs:
    enabled: false
```

Capabilities¶

The table below describes the specific capabilities provided by this toolset. HolmesGPT can decide to invoke any of these capabilities when answering questions or investigating issues.

| Tool Name | Description |
|---|---|
| fetch_pod_logs | Retrieve logs from Datadog with support for filtering, time ranges, and multiple storage tiers |